A survey of machine learning in credit risk

ABSTRACT

Machine learning algorithms have come to dominate several industries. After decades of resistance from examiners and auditors, machine learning is now moving from the research desk to the application stack for credit scoring and a range of other applications in credit risk. This migration is not without novel risks and challenges. Much of the research is now shifting from how best to make the models to how best to use them in a regulator-compliant business context. This paper surveys the impressively broad range of machine learning methods and application areas for credit risk. In the process of that survey, we create a taxonomy for thinking about how different machine learning components are combined to create specific algorithms. The reasons why machine learning sometimes outperforms simple linear methods are explored through a specific lending example. Throughout, we highlight open questions, ideas for improvements and a framework for choosing the best machine learning method for a specific problem.

Keywords: machine learning; artificial intelligence; credit risk; credit scoring; stress testing.

1 INTRODUCTION

The greatest difficulty in writing a survey of machine learning (ML) in credit risk is the extraordinary volume of published work. Just in the area of comparative analyses of ML applied to credit scoring, dozens of papers can be found. The goal of this survey cannot be to index all work on ML in credit risk. Even listing all of the worthy papers is beyond the attainable scope.

Rather, this survey seeks to identify the major methods being used and developed in credit risk and to document the breadth of application areas. Most importantly, this paper seeks to provide some intuitive insights as to why certain methods work in specific areas. When does ML work better than linear methods only because it is a quicker path to an answer, and when does it instead discover something about the problem that is undiscoverable with traditional methods? Further, as a result of this research we hope to identify some areas of investigation that could be fruitful but have not yet been fully explored.

In attempting to provide a balanced view of the state of ML, some passages herein may take a tone that suggests ML is “much ado about nothing”. In other passages we are clearly singing the virtues of ML, particularly when discussing ensemble methods for robustness, deep learning to analyze alternate data and techniques for modeling the smallest data sets. ML is clearly successful in some cases and disturbingly overblown in others. It brings genuine innovations in important areas while in some cases painfully rediscovering old methods. Overall, it has made significant strides toward mainstream application but still has major challenges to overcome.

The structure of the paper is as follows. Section 2 gives a definition of ML intended, in part, to limit the scope of this survey to a manageable breadth, while Section 3 offers a modeling taxonomy based on defining data structures, architectures, estimators, optimizers and ensembles. From this perspective, much ML research is a human-based search of the metadesign space of what happens when you mix and match among those categories. Section 4 discusses the many application areas within credit risk and some of the model approaches found within each. Section 5 reviews a specific example of testing many ML algorithms to illustrate their differences relative to traditional methods, while Section 6 discusses the significant challenges in creating ML models and using them in business contexts. Section 7 pulls these thoughts together to highlight areas where future comparative studies could provide significant value to practitioners.

2 WHAT IS MACHINE LEARNING?

We tend to think of statistical models and linear methods as something other than ML, and yet “simple” linear regression can take on unbounded complexity through factor variables, spline approximations, interaction terms and massive numbers of descriptive input variables via dimension reduction methods such as singular value decomposition. At the heart of many ML algorithms are search or optimization methods that were pioneered decades or centuries ago in other contexts. Bagging, boosting and random forests hark back to earlier work on ensemble methods (Clemen 1989; Opitz and Maclin 1999).

Harrell (2019) proposes a distinction between statistical modeling and ML.

- Uncertainty. Statistical models explicitly take uncertainty into account by specifying a probabilistic model for the data.

- Structural. Statistical models typically start by assuming the additivity of predictor effects when specifying the model.

- Empirical. ML is more empirical, allowing for high-order interactions that are not prespecified, whereas statistical models have identified parameters of special interest.

The above items carry other implications. For example, search-based methods such as Monte Carlo simulations, genetic algorithms and various forms of gradient descent usually do not provide confidence intervals for the parameters, and correspondingly are typically considered as ML. Ensemble methods in which multiple models are combined are generally considered to be ML, even when the constituent models are statistical. It could also be said that traditional statistical methods rely on analyst selection of input features and interaction terms, whereas ML methods emphasize algorithmic selection of features, discovery of interaction terms and even creation of features from raw data.

Drawing the line between ML and “traditional modeling” is challenging for even the best scientific linguist. Practically speaking, ML seems like it should include models that emphasize nonlinearity, interactions and data-driven structures, and exclude simple additive linear methods with moderate numbers of inputs. The distinction may be more in the specific application than the method used. For example, an artificial neural network could be dumbed down to a nearly linear adder, and common logistic regression can incorporate almost all the learnings from a sophisticated ML algorithm through artful use of binning, interaction terms and segmentation.

Some methods might be viewed as intermediates, like transitional species in evolution. (The author recognizes that “transitional species” is a misnomer in evolutionary taxonomy, but the perspective is not inappropriate here.) Forward stepwise regression or backward stepwise regression automate feature selection while being statistically grounded. Principal components analysis (PCA) is an inherently linear, statistical method of dimensionality reduction via eigenvalue estimation, whereas other dimensionality reduction methods lean much more toward ML. One of the greatest strengths of neural networks is that they can be used as nonlinear dimensionality reduction algorithms.

Within this attempted dichotomy, many ML techniques are rapidly taking on statistical rigor. This maturing process is what we see in any field where rapid advances are followed by a team of scientists filling in theoretical and technical details.

Many of the most public successes of ML are coupled with “big data”: massive data sets that allow equally massive parameterizations of the problem, so that the optimal transformations of the inputs and the optimal dimensionality reduction are learned from the data rather than via human effort. However, ML should not be viewed as synonymous with big data. Some ML methods appear well suited to very thin data sets where even linear regression struggles. Eventually, as we move into truly human-style artificial intelligence, the ability to learn from a single event in the context of a “physical” model of the world would show the power of ML with the smallest of data sets.

In credit risk, we are often stuck with small data sets. This was observed in the credit scoring survey by Lessmann et al (2015), in which only 5 of the 48 papers surveyed had 10 000 or more accounts to test: quite small samples compared with the big data headlines, but this is often the reality of credit risk modeling. For many actual portfolios, the number of accounts multiplied by the loss rate leaves very few training events. Even in subprime consumer lending, where loss rates are higher, only the largest lenders have had the data sets needed to apply the most data-hungry techniques like deep learning, or so it seems. However, ML is succeeding in credit risk modeling even on smaller data sets, apparently by emphasizing robustness and simpler interactions as opposed to the extreme nonlinearities in big-data contexts such as image processing (Krizhevsky et al 2012), voice recognition (Graves et al 2006) and natural language processing (Collobert and Weston 2008).

While ML is generating successes in credit risk, these are less dramatic in well-worn domains like prime mortgages. The biggest wins appear to be in niche products, alternate channels serving the underbanked (Abdulrahman et al 2014) and alternate data sources. A well-trained ML algorithm may be preprocessing deposit histories (ABA Banking Journal 2018), corporate financial statements, Twitter posts (Mengelkamp et al 2015), social media (Allen et al 2020; Bailey et al 2018; Freedman and Jin 2017) or mobile phone use (Bjorkegren and Grissen 2020; San Pedro et al 2015) to create input factors that eventually feed into deceptively simple methods such as logistic regression models.

We should also note that, in looking at applications of ML to credit risk, we must look beyond predicting probability of default (PD). One of the great early success stories of ML was in fraud detection (Ghosh and Reilly 1994). Anti-fraud (Zhou et al 2018), anti-money laundering (Awasthi 2012; Li et al 2020; Paula et al 2016; Tang and Yin 2005; Wang and Yang 2007) and target-marketing (Baesens et al 2002; Ling and Li 1998) are all applications that make heavy use of ML, but they are outside the boundaries we will draw here around credit risk applications. Still, we must consider applications to predicting exposure at default (EAD), recovery modeling, collections queuing and asset valuation, to name a few.

The following sections provide an introduction to the literature on ML methods, applications in credit risk, what makes ML work and the challenges with employing ML in credit risk.

3 MACHINE LEARNING METHODS

Providing an exhaustive list of ML methods is not feasible, particularly when we look beyond credit scoring to the broader applications of ML across credit risk modeling. One of the greatest challenges of creating any list of models is the difficulty in defining a model. The name given to a model typically represents a combination of data structure, architecture, estimator, selection or ensemble process and more. Authors may swap out one estimator for another, or add ensembles on top and describe it as a new model. This abundant hybridization leads to an exponential growth in the literature and the number of model names. Finding the right combination is, of course, very valuable, but the human search through this model component space with publications as measurement points is more than can be cataloged here.

In this section we will identify key sets of available components behind the models and then categorize some of the most studied models according to the components used. Of course, each of these lists can never be complete. They are intended only to be representative.

3.1 Data structures

Choosing a data structure is the first step in either statistical modeling or ML. The model must be chosen to align with the data being modeled. A range of target variables is possible in credit risk, and those variables can be observed with different frequencies and aggregation, depending on the business application.

Table 1 lists some of the outputs a researcher might wish to model in the domain of credit risk. Items such as preprovision net revenue (PPNR) (Liu et al 2018) and prepayment (Schwartz and Torous 1989) might not seem like credit risk tasks, but when they are modeled independently from credit risk the result can be conflicting predictions leading to nonsensical financial projections. Taking a consistent, coordinated perspective of all account outcomes and performance, as in competing-risk architectures and models (Fine and Gray 1999; Prentice 1978), is the best hope of predicting pricing and profitability.

Even deposit modeling can leverage very similar methods, and works best when a total customer view is taken. Deposit balances are a potentially valuable input to credit risk models, but are not always categorized as credit risk targets. Anti-fraud, anti-money laundering and target marketing are considered as separate from credit risk because they are not part of the analysis of an active customer relationship, although even here the boundaries are weak.

For any target to be modeled, a decision must be made as to the aggregation level and performance to be predicted. Table 2 lists the most common answers. Each type of data usually has a corresponding literature. Econometric models (Enders 2014; Wei 1990) focus on time series data, for either a portfolio or segments therein; age–period–cohort (APC) models (Fu 2018; Glenn 2005; Mason and Fienberg 1985) are applied to vintage performance time series; survival models (Hosmer et al 2008; Therneau and Grambsch 2000) and panel data models (Hsiao 2014; Wooldridge 2010) are applied to account performance time series; and the large literature on credit scoring (Anderson 2019; Thomas et al 2017) focuses mostly on account outcomes, using a single binary performance indicator for each account.

By starting the discussion with target variables, what follows is immediately focused on supervised learning. The assumption is that unsupervised learning techniques might be used to create input factors. Many forms of dimensionality reduction and factor creation can be conducted using unsupervised methods. PCA and most segmentation methods can be considered unsupervised learning. However, a credit risk model will ultimately always finish with a supervised learning technique.

3.2 Architectures

Once the problem has been stated as a target variable to be predicted, with its data structure as in Tables 1 and 2, an architecture must be chosen (see Table 3). This is the point where the distinction between traditional methods and ML can appear.

In Table 3 “additive effects” refers to regression approaches (Harrell 2015; Hilbe 2009). “Additive fixed effects” includes the use of fixed effects (dummy variables), again in a regression approach, eg, a panel model approach.

State-transition models (Bangia et al 2002; Nickell et al 2000) (also known as grade, rating or score-migration models depending on whether they are applied to delinquency states, risk grades, agency ratings or credit scores) are all variations on Markov chains (Norris 1998). Roll-rate models (Federal Deposit Insurance Corporation 2007) capture the net forward transition of a state-transition model and are used throughout credit risk modeling. Generally, this architecture involves identifying a set of key intermediate states and modeling the transitions between those states and to the target state. Usually the target is a terminal state like charge-off or payoff.
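
As a minimal sketch of the state-transition idea, the Python below propagates a distribution over delinquency states through a one-period transition matrix and compares the result with the product of consecutive forward roll rates. The state names, the matrix values and the function names are hypothetical, chosen only for illustration and not estimated from any portfolio.

```python
# Hypothetical delinquency states; charge-off is an absorbing terminal state.
STATES = ["current", "30dpd", "60dpd", "chargeoff"]

# Hypothetical one-period transition matrix: row i gives P(next state | state i).
P = [
    [0.95, 0.05, 0.00, 0.00],
    [0.50, 0.30, 0.20, 0.00],
    [0.20, 0.10, 0.30, 0.40],
    [0.00, 0.00, 0.00, 1.00],
]


def step(dist, matrix):
    """Advance a state distribution one period (row vector times matrix)."""
    n = len(matrix)
    return [sum(dist[i] * matrix[i][j] for i in range(n)) for j in range(n)]


def project(dist, matrix, periods):
    """Apply the Markov chain for several periods."""
    for _ in range(periods):
        dist = step(dist, matrix)
    return dist


def cumulative_roll_rate(matrix):
    """Product of forward roll rates: current -> 30dpd -> 60dpd -> charge-off."""
    rate = 1.0
    for i in range(len(matrix) - 1):
        rate *= matrix[i][i + 1]
    return rate


start = [1.0, 0.0, 0.0, 0.0]  # a cohort in which every account is current
after_three = project(start, P, 3)
print(dict(zip(STATES, after_three)))
print(cumulative_roll_rate(P))  # 0.05 * 0.20 * 0.40
```

With these particular numbers, charge-off can only be reached in three periods by rolling forward one bucket each period, so both quantities equal 0.004. Over longer horizons the full matrix also captures cures and repeated delinquency, which a single chain of roll rates ignores.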

Going beyond the above architectures leads further into the realm of ML, although again there are few fixed boundaries. Convolutional networks (Krizhevsky et al 2012), feedforward networks (Angelini et al 2008; Tam and Kiang 1992) and recurrent neural networks (RNNs) (Lipton et al 2015) are all kinds of artificial neural networks and are just a few of the many structures being tested in credit risk applications.

Whenever the nonlinearity of a problem exceeds the flexibility of the underlying model, segmenting the analysis is a common solution. The more nonlinear the base model, the less segmentation is required. Traditional logistic regression models may actually be a collection of many separate regression models applied to different segments, whereas a neural network or decision tree may use a single model.

Some models are themselves segmentation engines. Methods such as support vector machines (SVMs) (Vapnik 2013) use hyperplanes or other structures to segment the input space. Decision trees (Quinlan 1986) can also be viewed as a high-dimensional segmentation technique and are employed in a variety of ML approaches. Nearest neighbor methods (Cover and Hart 1967; Henley and Hand 1996) are difficult to classify in this architectural taxonomy but seem closer to these approaches than to other categories.

Fuzzy rules are used to capture uncertainty directly in the forecasting process (Mochon et al 2008) and are often combined with other methods (Piramuthu 1999). Rough sets (Pawlak 1982) can be seen as having a similar objective of considering the vagueness and imprecision of available information, but do so using a different theoretical framework.

RNNs are used primarily to model time series data. By making the forecast from one period an input to the network for the next period, they are effectively a nonlinear version of vector autoregressive moving average (VARMA) models, also known as multivariate Box–Jenkins models (Li and McLeod 1981; Mauricio 1995). Long short-term memory (LSTM) networks apply a specific architecture to the RNN framework in order to scale and refine the use of memory in the forecasting.

Overall, many architectures can be used in time series forecasting. The same lagged inputs used in linear distributed-lag models (Almon 1965; Zanobetti et al 2000) can be used as inputs to ML methods. To reduce the dimensionality of the problem and aid visualization, optimal state-space reconstruction, also known as the method of delays, can be used (Breeden and Packard 1994; Kugiumtzis 1996; Packard et al 1980; Sauer et al 1991).
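
The lagged-input idea can be sketched in Python as follows: each time point is represented by a delay vector of past values and paired with the value to be forecast, so that any regression or ML method can be trained on the pairs. The function names, the series and the choice of embedding dimension and lag are illustrative assumptions; choosing the dimension and lag well is what the state-space reconstruction literature addresses.

```python
def delay_embed(series, dim, lag=1):
    """Delay vectors [x_t, x_{t-lag}, ..., x_{t-(dim-1)*lag}] for every valid t."""
    span = (dim - 1) * lag
    return [[series[t - k * lag] for k in range(dim)] for t in range(span, len(series))]


def lagged_training_pairs(series, dim, lag=1, horizon=1):
    """Pair each delay vector with the value `horizon` steps ahead (supervised setup)."""
    span = (dim - 1) * lag
    inputs, targets = [], []
    for t in range(span, len(series) - horizon):
        inputs.append([series[t - k * lag] for k in range(dim)])
        targets.append(series[t + horizon])
    return inputs, targets


monthly_loss_rate = [1.0, 1.2, 1.1, 1.4, 1.6, 1.5]  # hypothetical series, in percent
X, y = lagged_training_pairs(monthly_loss_rate, dim=2)
```

The same pairs could feed a linear distributed-lag regression or a nonlinear learner; only the model fitted to them changes.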

The application of convolutional neural networks (CNNs) to consumer transaction data (Kvamme et al 2018) seems distant from the leading applications of CNNs in image processing, but there will likely be many more applications of CNNs in credit risk, particularly with recent advances incorporating rotational (Cheng et al 2016; Dieleman et al 2015) and other symmetry transformations to increase the generalization power of CNNs.

Not shown is the list of possible inputs, because this would be too extensive.

3.3 Estimators and optimizers

The primary purpose of this modeling taxonomy is to illustrate that, for example, a genetic algorithm is not a model. Practitioners, both experienced and novice, often use sloppy terminology, confusing data structures, architectures and optimizers. Here we illustrate that many different estimators and optimizers can be applied in an almost mix-and-match fashion across the range of architectures. By clearly identifying the components of a model, researchers can find opportunities for creating useful hybrids.

The literature also attempts to carefully distinguish between estimators and optimizers. In simple terms, estimators all rely on a statistical principle to estimate values for the model’s parameters, usually with corresponding confidence intervals and statistical tests in the traditional statistical framework. Optimizers generally follow an approach of specifying a fitness criterion to be optimized. As parameter values are changed the fitness landscape can be mapped. Each optimizer follows a specific search strategy across that fitness landscape. Of course, here again it can be difficult to draw clear lines between these categories, as estimators and optimizers can each take on properties of the other.

Tables 4 and 5 list some of the many methods used to estimate parameters or even metaparameters (architectures) of a model. Items such as backpropagation are specific to a certain architecture, eg, backpropagation as a way to revise the weights of a feedforward neural network. Most, however, can be applied creatively across many architectures for a variety of problems.

Maximum likelihood estimation is the dominant statistical estimator and underlies, for example, the logistic regression estimation that is ubiquitous in scoring and many other contexts. Least squares estimation predated maximum likelihood but can be derived from it by assuming normally distributed errors. Partial likelihood estimation was a clever efficiency developed for estimating proportional hazards models without estimating the baseline hazard function needed in the full likelihood function.

Aside from some deep philosophical issues, Bayesian methods are particularly favored when a prior is available to guide the solution. Markov chain Monte Carlo (MCMC) starts with a Bayesian prior distribution for the parameters and uses a Markov chain to step toward the posterior distribution given the data, somewhat like a correlated random walk.

In data-poor settings, Bayesian methods provide a powerful mechanism for combining expert knowledge from the analyst with available observations in order to obtain a more robust answer. Computing a batting average in baseball is an easy way to illustrate this. Someone who has never swung could be assumed to have a 50/50 chance of hitting the ball: a 0.500 average. After their first swing, a miss would take their batting average to 0.333 and a hit would take it to 0.667. With a maximum likelihood estimation, the best fit to the data would be 0.000 for a miss and 1.000 for a hit, which seems less helpful until more observations are acquired. This is Laplace’s rule of succession. Not coincidentally, Laplace also formulated Bayes’s theorem.
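
The batting-average arithmetic can be checked with a few lines of Python using exact fractions. The rule of succession is the posterior mean under a uniform Beta(1, 1) prior, (s + 1)/(n + 2) for s hits in n swings, while the maximum likelihood estimate is s/n. The function names are illustrative.

```python
from fractions import Fraction


def laplace_estimate(successes, trials):
    """Rule of succession: posterior mean of a uniform Beta(1, 1) prior updated by the data."""
    return Fraction(successes + 1, trials + 2)


def mle_estimate(successes, trials):
    """Maximum likelihood estimate of the hit rate; undefined with no observations."""
    if trials == 0:
        raise ValueError("the MLE is undefined before any observations")
    return Fraction(successes, trials)


for hits in (0, 1):
    print(hits, laplace_estimate(hits, 1), mle_estimate(hits, 1))
```

Before any swing the Laplace estimate is 1/2; after one miss it is 1/3 and after one hit 2/3, matching the 0.333 and 0.667 in the text, whereas the maximum likelihood estimates jump to 0 and 1.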

With the method of moments, the moments of the distribution are expressed in terms of the model parameters. These equations are then solved for the parameters by setting the population moments equal to the sample moments.
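
As a worked instance, the sketch below applies the method of moments to the beta distribution, a natural candidate for quantities bounded between 0 and 1 such as default or recovery rates. For Beta(α, β) the mean is α/(α + β) and the variance is αβ/((α + β)²(α + β + 1)); solving these two equations for α and β gives the closed form in the code. The function name and the sample values are hypothetical.

```python
from statistics import fmean, pvariance


def beta_method_of_moments(samples):
    """Match the sample mean and (1/n) sample variance to those of Beta(alpha, beta)."""
    m = fmean(samples)
    v = pvariance(samples, mu=m)
    # A beta distribution requires 0 < m < 1 and 0 < v < m(1 - m).
    if not (0 < m < 1) or not (0 < v < m * (1 - m)):
        raise ValueError("sample moments are not attainable by a beta distribution")
    total = m * (1 - m) / v - 1  # this is alpha + beta
    return m * total, (1 - m) * total


alpha, beta = beta_method_of_moments([0.1, 0.3])  # hypothetical observed rates
```

For these two observations the mean is 0.2 and the variance 0.01, giving α = 3 and β = 12; Beta(3, 12) indeed has mean 3/15 = 0.2 and variance 36/3600 = 0.01.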

Linear programming and quadratic programming are methods for incorporating constraints. Many other constrained optimization methods exist, such as Lagrange multipliers, which provide a mechanism for adjusting the fitness function to incorporate penalty terms.

Gradient descent can be accomplished via several specific algorithms, but it generally refers to computing the local gradient of the fitness landscape at a test point and stepping in the direction with the steepest slope, hopefully toward the desired minimum. Backpropagation is gradient descent in the context of a neural network, where the gradient is computed for each node’s parameters. Reinforcement learning is the more general concept of adjusting parameters, usually in a neural network context, based on new experiences. Kalman filters are an optimal update procedure for linear, normally distributed models, which could be thought of as a subset of reinforcement learning.
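
To make the gradient descent description concrete, the sketch below fits a one-factor logistic regression by batch gradient descent on the mean log-loss. Because the log-loss is the negative log-likelihood, the iterates move toward the maximum likelihood estimate. The data, learning rate and number of steps are hypothetical choices for a toy problem, not tuned recommendations.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mean_log_loss(b0, b1, xs, ys):
    """Average negative log-likelihood of p = sigmoid(b0 + b1 * x)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(b0 + b1 * x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)


def fit_logistic_gd(xs, ys, learning_rate=0.1, steps=5000):
    """Batch gradient descent on the mean log-loss."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in zip(xs, ys):
            residual = sigmoid(b0 + b1 * x) - y  # derivative of the loss w.r.t. the linear score
            g0 += residual
            g1 += residual * x
        # Step against the gradient, i.e. in the direction of steepest descent.
        b0 -= learning_rate * g0 / n
        b1 -= learning_rate * g1 / n
    return b0, b1


# Hypothetical data: x is a standardized risk factor, y = 1 marks a default.
xs = [-2, -1, -1, 0, 0, 1, 1, 2]
ys = [0, 0, 1, 0, 1, 0, 1, 1]
b0, b1 = fit_logistic_gd(xs, ys)
```

The data are deliberately not perfectly separable; on separable data the likelihood has no finite maximum and the slope would keep growing with more steps.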

Genetic algorithms, evolutionary computation and genetic programming (GP) are all modeled on evolutionary principles. In an optimization setting, mutation operations with survivor selection are equivalent to stochastic gradient descent. Including crossover between candidates works if symmetries exist in the fitness landscape such that sets of parameters form a useful subsolution within the model.

Not shown are the many estimation methods developed to handle correlated input factors, such as the least absolute shrinkage and selection operator (lasso) (Tibshirani 1996) and ridge regression (Hoerl and Kennard 1970).
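
The effect of ridge regression on correlated inputs can be seen in a small case with two perfectly collinear predictors, where ordinary least squares has no unique solution. The sketch below solves the ridge normal equations (X′X + λI)β = X′y in closed form for two centered predictors, leaving the intercept unpenalized. The function name and data are hypothetical, and the lasso, which generally needs an iterative solver, is not shown.

```python
def ridge_two_features(x1, x2, y, lam):
    """Ridge fit of y = a + b1*x1 + b2*x2 with only b1 and b2 penalized."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    c1 = [v - m1 for v in x1]
    c2 = [v - m2 for v in x2]
    cy = [v - my for v in y]
    # Entries of X'X + lam*I and X'y for the centered predictors.
    s11 = sum(u * u for u in c1) + lam
    s12 = sum(u * v for u, v in zip(c1, c2))
    s22 = sum(v * v for v in c2) + lam
    r1 = sum(u * w for u, w in zip(c1, cy))
    r2 = sum(v * w for v, w in zip(c2, cy))
    det = s11 * s22 - s12 * s12
    if det == 0:
        raise ValueError("singular system (collinear inputs); use lam > 0")
    b1 = (s22 * r1 - s12 * r2) / det
    b2 = (s11 * r2 - s12 * r1) / det
    return my - b1 * m1 - b2 * m2, b1, b2


x = [0.0, 1.0, 2.0, 3.0]
y = [1.0, 3.0, 5.0, 7.0]  # hypothetical data with y = 2x + 1
intercept, b1, b2 = ridge_two_features(x, x, y, lam=1.0)
```

With λ = 1, ridge splits the weight equally between the two identical inputs (10/11 each) and shrinks their combined slope to 20/11, slightly below the true value of 2, whereas λ = 0 leaves the system singular.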

Of course, many of these concepts can be combined. Stochastic backpropagation and stochastic gradient descent (Bottou 2012) are widely used. Simulated annealing can be thought of as combining the stochastic gradient descent concept with the multiple candidate solutions approach of evolutionary methods. Bayesian methods can be combined with many other optimization approaches, such as Bayesian backpropagation (Buntine and Weigend 1991) or MCMC as described above.

3.4 Heterogeneous ensembles

Ensemble modeling is actually a general technique that can combine forecasts from different model types. “Triangulation” has been a common technique over several decades for portfolio managers to create loss forecasts by comparing the outputs of several different models, each with different confidence intervals and known strengths and weaknesses. Voting is largely a formalization of what managers have been doing intuitively, with several interesting variations (Kuncheva 2002; Van Erp et al 2002).

Ensemble modeling (Clemen 1989; Dasarathy and Sheela 1979; Dietterich 2000; Opitz and Maclin 1999; Polikar 2006) was in use well before the burst of activity in ML, but quickly proved itself to be a valuable addition to almost any ML technique, particularly in credit risk (Wang et al 2011). Most research into ensemble modeling can be split between homogeneous methods, in which multiple models of the same type are combined to create better overall forecasts, and heterogeneous methods, in which any types of models can be combined. We also consider a third category of hybrid ensembles, in which two complementary model types are integrated via mechanisms more specific to the methods than in the generic heterogeneous ensemble approaches.

For an ensemble to be more effective than the individual contributors, Hansen and Salamon (1990) showed that the individual models must be more accurate than random and the models must not be perfectly correlated. In other words, we cannot create useful forecasts from a collection of random models, and the best ensembles have constituents that have complementary strengths.
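
This condition can be illustrated in its idealized form by assuming that the models' errors are fully independent, the extreme case of "not perfectly correlated". A majority vote is then correct whenever more than half of the models are, which is a binomial tail probability. The function name is illustrative.

```python
from math import comb


def majority_vote_accuracy(n_models, p):
    """Probability that a majority of n_models independent binary classifiers,
    each correct with probability p, is correct. Assumes fully independent errors."""
    if n_models % 2 == 0:
        raise ValueError("use an odd number of models so that ties cannot occur")
    needed = n_models // 2 + 1
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(needed, n_models + 1)
    )


for p in (0.4, 0.5, 0.7):
    print(p, [round(majority_vote_accuracy(n, p), 3) for n in (1, 3, 11)])
```

Accuracy rises with the number of models when p > 0.5, stays at 0.5 for coin-flip models and falls when p < 0.5. Correlated errors shrink the gain, and perfectly correlated models gain nothing.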

Ensemble modeling seems particularly well suited to credit risk because of the limited data sets typically available. Although the underlying dynamics can be quite complex and explainable, with a rich variety of observed and unobserved factors, the actual data available may support models of only limited complexity. Even though many factors can be important, multicollinearity (Neeter et al 1985) can limit the modeler's ability to include more than a few factors, and it is often a deeper problem than is generally recognized (Breeden et al 2015; Goodhue et al 2011). Dimensionality reduction methods such as singular value decomposition, PCA (Jolliffe 2002) and projection pursuit (Friedman and Stuetzle 1981; Friedman and Tukey 1974; Huber 1985) are ways to address multicollinearity, but they do not address sensitivity to outliers and overfitting as effectively as the full nonlinearity treatment available in ML.

The basic principle behind ensemble modeling is that different models can capture different aspects of the data. This can provide robustness to outliers and anomalies (Windeatt and Ardeshir 2004) as well as the choice of factors included in the modeling. Both theoretical (Hansen and Salamon 1990; Hsu 2017; Krogh and Vedelsby 1995) and empirical studies have shown that this diversity, when obtained for individually accurate predictors, has significant out-of-sample advantages.

Table 6 lists some of the methods used for combining forecasts in ensemble modeling of potentially heterogeneous models. Many of these methods were developed from the perspective of choosing from several possible categories (Van Erp et al 2002). In a broader credit risk context, we can have situations with binary outcomes, eg, default or not; multiple (categorical) outcomes, eg, transition to different states; or continuous outcomes, eg, forecasting a default rate.

Combining forecasts for binary events can be performed with several methods. Voting methods are the most common, where each constituent model gets one vote. Plurality voting is the simplest of these, where the outcome with the most votes is chosen. If the constituent models produce probabilities or some kind of fractional forecast, then each constituent model can divide its vote proportionally between the two outcomes, which are then summed. Classification methods can be modified to produce probabilities to facilitate their use more broadly (Kruppa et al 2013; Platt 1999). In the product rule, these fractional votes are multiplied, which means extremely confident models can dominate an outcome.
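
The three rules can be compared directly in a short Python sketch. The model outputs below are hypothetical probabilities for the outcomes [0: performing, 1: default], and the function names are illustrative.

```python
from collections import Counter
from math import prod


def plurality(labels):
    """One vote per model for its predicted label; the most common label wins.
    Ties go to the label encountered first."""
    return Counter(labels).most_common(1)[0][0]


def sum_rule(prob_vectors):
    """Each model splits its vote across outcomes by its probabilities; votes are summed."""
    totals = [sum(column) for column in zip(*prob_vectors)]
    return max(range(len(totals)), key=totals.__getitem__), totals


def product_rule(prob_vectors):
    """Fractional votes are multiplied, so a near-zero probability acts almost as a veto."""
    scores = [prod(column) for column in zip(*prob_vectors)]
    return max(range(len(scores)), key=scores.__getitem__), scores


# Hypothetical outputs of three models for outcomes [0: performing, 1: default].
models = [[0.9, 0.1], [0.9, 0.1], [0.001, 0.999]]
labels = [max(range(2), key=p.__getitem__) for p in models]
print(plurality(labels), sum_rule(models), product_rule(models))
```

Here plurality voting and the sum rule both favor outcome 0, while the product rule is swung to outcome 1 by the single extremely confident model.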

When predicting multiple possible outcomes (categorical outputs), the above methods generalize easily. In addition, majority voting, in which one outcome must receive more than half of the votes, differs from plurality voting. If no outcome has a majority, the least favored outcome is removed and a majority is sought among the remaining outcomes. A runoff vote simply repeats this majority voting process until one outcome wins a majority or only a single outcome remains.
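
A sketch of majority voting with elimination follows. It assumes that each model supplies a probability for every outcome, so that once an outcome is removed a model can recast its vote for its most probable remaining outcome. The function name, the data and the tie-breaking rule are illustrative choices.

```python
from collections import Counter


def runoff(prob_vectors):
    """Majority voting with elimination. Each model votes for its most probable
    remaining outcome; without a strict majority, the outcome with the fewest
    votes is dropped and the vote is repeated."""
    n_models = len(prob_vectors)
    remaining = list(range(len(prob_vectors[0])))
    while True:
        votes = Counter(max(remaining, key=p.__getitem__) for p in prob_vectors)
        leader = max(remaining, key=lambda o: votes[o])
        if 2 * votes[leader] > n_models or len(remaining) == 1:
            return leader
        # Drop the least favored outcome; ties go to the lowest index (arbitrary choice).
        remaining.remove(min(remaining, key=lambda o: votes[o]))


# Hypothetical probabilities from five models over three outcomes.
models = [
    [0.5, 0.3, 0.2],
    [0.5, 0.2, 0.3],
    [0.2, 0.5, 0.3],
    [0.2, 0.5, 0.3],
    [0.1, 0.3, 0.6],
]
print(runoff(models))
```

In the first round outcomes 0 and 1 tie with two votes each, so outcome 2 is eliminated; the fifth model then prefers outcome 1, which wins with three of five votes.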

Amendment voting starts with a majority vote between the first two candidate outcomes. The most favored is tested against the next candidate until one outcome remains. However, this procedure can be biased depending on the sequence of comparisons.

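The Condorcet count performs pairwise comparisons of all outcomes. The favored outcome from each comparison receives one point, and the outcome with the most points is chosen. Although it requires more comparisons, it has many favorable properties; in particular, unlike amendment voting, its result does not depend on the order in which the comparisons are made.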
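
Both pairwise procedures can be sketched in Python, with each model's preference between two outcomes read from its probabilities. A set of three hypothetical models with cyclic preferences shows how amendment voting depends on the order of comparisons. Function names, data and tie-breaking rules are illustrative.

```python
from itertools import combinations


def pairwise_winner(prob_vectors, a, b):
    """Outcome preferred by more models when only a and b are compared (ties go to a)."""
    prefer_a = sum(1 for p in prob_vectors if p[a] > p[b])
    prefer_b = sum(1 for p in prob_vectors if p[b] > p[a])
    return a if prefer_a >= prefer_b else b


def amendment_vote(prob_vectors, order):
    """Sequential pairwise majority votes in the given order."""
    current = order[0]
    for challenger in order[1:]:
        current = pairwise_winner(prob_vectors, current, challenger)
    return current


def condorcet_count(prob_vectors):
    """One point per pairwise win over all pairs; most points wins (ties: lowest index)."""
    n_outcomes = len(prob_vectors[0])
    points = [0] * n_outcomes
    for a, b in combinations(range(n_outcomes), 2):
        points[pairwise_winner(prob_vectors, a, b)] += 1
    return max(range(n_outcomes), key=points.__getitem__), points


# Cyclic preferences: 0 > 1 > 2, 1 > 2 > 0 and 2 > 0 > 1.
cyclic = [[0.5, 0.3, 0.2], [0.2, 0.5, 0.3], [0.3, 0.2, 0.5]]
print(amendment_vote(cyclic, [0, 1, 2]), amendment_vote(cyclic, [1, 2, 0]))

# Non-cyclic example in which outcome 1 beats both rivals head to head.
ordered = [[0.5, 0.3, 0.2], [0.4, 0.5, 0.1], [0.1, 0.3, 0.6]]
print(condorcet_count(ordered))
```

On the cyclic example the amendment winner changes with the comparison order, while the Condorcet count gives each outcome one point and so exposes the cycle as a tie.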

In Selfridge’s (1958) pandemonium method each model chooses one outcome, but that vote is stated with a confidence. These weighted votes are summed to choose a winner, meaning that model confidence intervals become important.
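
The confidence-weighted vote can be sketched as summing each model's stated confidence for the single outcome it chooses. Representing confidence as a number between 0 and 1, along with the function name and the example votes, are assumptions made for illustration.

```python
from collections import defaultdict


def pandemonium(votes):
    """votes: (outcome, confidence) pairs, one per model; confidences are summed."""
    totals = defaultdict(float)
    for outcome, confidence in votes:
        totals[outcome] += confidence
    return max(totals, key=totals.get), dict(totals)


# Hypothetical votes: one confident model against two hesitant ones.
votes = [("default", 0.9), ("no_default", 0.4), ("no_default", 0.45)]
print(pandemonium(votes))
```

A plurality vote would pick "no_default" two votes to one, but the confident model's 0.9 outweighs the combined 0.85 of the other two.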

If the constituent models cannot assign a probability to all possible outcomes (as needed for the sum and product rules), but they can rank the outcomes, then ranked voting can be used. The outcome can be chosen by mean rank (de Borda 1781), median rank or a trimmed mean or median rank.
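
The sketch below implements mean-rank (Borda-style) and median-rank aggregation, with an optional trimming parameter. The five hypothetical rankings show why the choice of statistic matters: two models that rank outcome A last pull its mean rank down, but leave its median rank untouched.

```python
from statistics import mean, median


def rank_vote(rankings, how="mean", trim=0):
    """Choose the outcome with the best (lowest) aggregate rank.

    rankings: one complete list per model, best outcome first.
    how: "mean" (Borda-style) or "median".
    trim: drop this many best and worst ranks per outcome before aggregating
    (a trimmed statistic); must be less than half the number of models.
    """
    scores = {}
    for outcome in rankings[0]:
        ranks = sorted(r.index(outcome) + 1 for r in rankings)  # 1 = top rank
        if trim:
            ranks = ranks[trim:len(ranks) - trim]
        scores[outcome] = mean(ranks) if how == "mean" else median(ranks)
    return min(scores, key=scores.get)


rankings = [["A", "B", "C"]] * 3 + [["B", "C", "A"]] * 2
print(rank_vote(rankings, "mean"))    # B  (mean ranks: A 1.8, B 1.6, C 2.6)
print(rank_vote(rankings, "median"))  # A  (median ranks: A 1, B 2, C 3)
```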

Single transferable vote also works from ranks, although not every model must rank every outcome. If one outcome has a majority of the top ranks, it is chosen. If not, the least preferred outcome is eliminated and the top ranks are reaggregated. The procedure continues until one outcome receives a majority.
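
A sketch of the single-transferable-vote count, assuming rankings are given best first and may be partial. When every model ranks every outcome, the same loop reproduces the runoff procedure described earlier for majority voting. Breaking elimination ties alphabetically is an arbitrary choice made here for determinism.

```python
from collections import Counter


def single_transferable_vote(rankings):
    """Instant-runoff count over possibly partial rankings (best first).

    Each model's vote goes to its highest-ranked outcome still in contention;
    a model whose ranked outcomes have all been eliminated no longer votes.
    """
    remaining = {o for r in rankings for o in r}
    while True:
        tops = [next((o for o in r if o in remaining), None) for r in rankings]
        counts = Counter(t for t in tops if t is not None)
        leader, votes = counts.most_common(1)[0]
        if votes > sum(counts.values()) / 2 or len(remaining) == 1:
            return leader
        # Eliminate the least-preferred outcome; sorting makes ties deterministic.
        loser = min(sorted(remaining), key=lambda o: counts[o])
        remaining.discard(loser)


# Hypothetical partial rankings from five models.
rankings = [["A", "B"], ["A"], ["B", "A"], ["C", "B"], ["C", "B", "A"]]
print(single_transferable_vote(rankings))  # A (B is eliminated, its vote moves to A)
```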

Beyond voting, we could imagine creating a model of models. In a linear regression context, this does not introduce any new information beyond the initial estimate. However, with stacking (Wolpert 1992), the initial models are trained on a subset of the total data. Then a secondary model, often a linear regression one, is trained on the holdout sample, considering model accuracies and correlations. ML methods can also be used to create models of models (Todorovski and Dzeroski 2003).
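
A minimal stacking sketch in NumPy, under simplifying assumptions: both base models are ordinary least squares fits that see different inputs, the meta-model is also linear, and a single random split separates the base-model sample from the holdout. Stacking in practice often uses cross-validated out-of-fold predictions rather than one split; the helper names and the synthetic data are hypothetical.

```python
import numpy as np


def fit_ols(X, y):
    """Least-squares coefficients with an intercept."""
    X1 = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return beta


def predict_ols(beta, X):
    return np.column_stack([np.ones(len(X)), X]) @ beta


def fit_stack(X, y, feature_sets, train_frac=0.6, seed=0):
    """Base models on one subset of rows, a linear meta-model on the holdout rows."""
    idx = np.random.default_rng(seed).permutation(len(y))
    n_train = int(train_frac * len(y))
    train, hold = idx[:n_train], idx[n_train:]
    base = [fit_ols(X[train][:, cols], y[train]) for cols in feature_sets]

    def level_one(Xs):
        # Each column is one base model's forecast.
        return np.column_stack(
            [predict_ols(b, Xs[:, cols]) for b, cols in zip(base, feature_sets)]
        )

    meta = fit_ols(level_one(X[hold]), y[hold])
    return lambda X_new: predict_ols(meta, level_one(X_new))


# Synthetic data: each base model sees only one of the two drivers.
rng = np.random.default_rng(1)
X = rng.normal(size=(500, 2))
y = 2 * X[:, 0] + 3 * X[:, 1] + rng.normal(scale=0.5, size=500)
predict = fit_stack(X, y, feature_sets=[[0], [1]])
print(np.mean((predict(X) - y) ** 2))  # should be near the noise variance of 0.25
```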

One advantage of ensembles is the ability to create confidence measures for classification models, although direct, single-model approaches are also available (Provost and Domingos 2003).

For continuous-valued predictions, averages, medians, trimmed values and stacking all apply. Continuous forecasts are often – or should be, in best practice – accompanied by confidence intervals. Therefore, weighted averages or some method that incorporates those confidences would be preferable.
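
One standard way to use stated confidences is inverse-variance weighting, sketched below with two hypothetical default-rate forecasts. The combined standard error it reports assumes the forecast errors are independent, which is optimistic when the constituent models share training data.

```python
import numpy as np


def inverse_variance_average(forecasts, std_errors):
    """Weight each forecast by 1 / se^2.

    The combined standard error assumes independent forecast errors.
    """
    f = np.asarray(forecasts, dtype=float)
    w = 1.0 / np.asarray(std_errors, dtype=float) ** 2
    combined = np.sum(w * f) / np.sum(w)
    return combined, np.sqrt(1.0 / np.sum(w))


# Two hypothetical default-rate forecasts: 2.0% (se 0.2%) and 3.0% (se 0.4%).
rate, se = inverse_variance_average([0.020, 0.030], [0.002, 0.004])
print(round(rate, 4), round(se, 5))  # 0.022 0.00179
```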

3.5 Homogeneous ensembles

Any method for combining heterogeneous model predictions can of course be applied to homogeneous models, where multiple models of the same type are built to be combined. However, some methods have been specifically designed to work with homogeneous ensembles.

3.5.1 Bagging

Bootstrap aggregation (bagging) (Breiman 1996; Lee and Yang 2006; Liang et al 2011) is a simple process of subsampling the available training data with replacement. Considering the typically limited size of the training samples in credit risk, the subsets can be 75% or more of the available data. Bagging can be used with any model type, and the resulting forecasts can be combined as described for heterogeneous models, although the sum rule is used most often (Kittler et al 1998).
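
The sketch below bags one-split decision stumps on a synthetic portfolio. Each stump is fit on 75% of the observations drawn with replacement, and the default-rate forecasts are combined by averaging (the sum rule divided by the number of models). The stump learner and the data-generating default rates are illustrative assumptions.

```python
import numpy as np


def fit_stump(x, y):
    """One-split tree on a single input: (threshold, left default rate, right default rate)."""
    best = None
    for t in np.unique(x)[:-1]:
        left, right = y[x <= t], y[x > t]
        sse = ((left - left.mean()) ** 2).sum() + ((right - right.mean()) ** 2).sum()
        if best is None or sse < best[0]:
            best = (sse, t, left.mean(), right.mean())
    return best[1:]


def fit_bagged(x, y, n_models=25, frac=0.75, seed=0):
    """Fit each stump on frac * n observations drawn with replacement."""
    rng = np.random.default_rng(seed)
    models = []
    for _ in range(n_models):
        idx = rng.choice(len(y), size=int(frac * len(y)), replace=True)
        models.append(fit_stump(x[idx], y[idx]))
    return models


def predict_bagged(models, x):
    """Sum rule, scaled to an average, over the constituent probability forecasts."""
    return np.mean([np.where(x <= t, p_left, p_right) for t, p_left, p_right in models], axis=0)


# Synthetic portfolio: default rate 5% below x = 0.5 and 30% above it.
rng = np.random.default_rng(42)
x = rng.uniform(size=400)
y = (rng.uniform(size=400) < np.where(x < 0.5, 0.05, 0.30)).astype(float)
models = fit_bagged(x, y)
print(predict_bagged(models, np.array([0.1, 0.9])))  # low-risk forecast first, higher-risk second
```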

For random subspace modeling (Ho 1998), a random sample of the available input factors is drawn for each model. This could also be done in a sequentially deterministic fashion, where the strongest explanatory variable from the first model is excluded from the next model in order to find structure among other variables, and so forth. The first application of the technique was for creating decision trees, leading to the literature on random forests, but the technique is generic to any model type.
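
Both subspace ideas can be sketched in a few lines. `random_subspaces` draws input factors at random. `sequential_exclusion` is one hypothetical reading of the deterministic variant: it measures a variable's strength by its absolute correlation with the target, a simplifying assumption, whereas a real implementation might use the fitted model's own importance measure.

```python
import numpy as np


def random_subspaces(n_features, n_models, k, seed=0):
    """Draw k distinct input factors for each model."""
    rng = np.random.default_rng(seed)
    return [sorted(rng.choice(n_features, size=k, replace=False).tolist()) for _ in range(n_models)]


def sequential_exclusion(X, y, n_models):
    """Deterministic variant: drop the strongest remaining input before the next model.

    'Strongest' = largest absolute correlation with the target (an assumption).
    """
    remaining = list(range(X.shape[1]))
    subsets = []
    for _ in range(min(n_models, len(remaining))):
        subsets.append(list(remaining))
        strength = [abs(np.corrcoef(X[:, j], y)[0, 1]) for j in remaining]
        remaining.pop(int(np.argmax(strength)))
    return subsets


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 3 * X[:, 0] + X[:, 1] + rng.normal(size=500)
print(random_subspaces(10, n_models=3, k=4))
print(sequential_exclusion(X, y, 3))  # [[0, 1, 2], [1, 2], [2]]
```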

Rotation forests (Rodriguez et al 2006) follow the random forests idea, but all of the data is used each time with the axes rotated in the data space for a subset of input factors prior to building each model. This has the effect of testing many different projections for predictive ability.
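
A sketch of how a single rotation could be built: the inputs are split at random into groups, each group is rotated onto its own principal axes, and the result is a block-diagonal orthogonal matrix. This simplifies Rodriguez et al (2006), whose algorithm also draws a subsample of the data when estimating each group's components; each ensemble member would use a fresh rotation.

```python
import numpy as np


def rotation_matrix(X, n_groups, seed=0):
    """Block-diagonal rotation from the principal axes of random feature groups."""
    rng = np.random.default_rng(seed)
    groups = np.array_split(rng.permutation(X.shape[1]), n_groups)
    Xc = X - X.mean(axis=0)
    R = np.zeros((X.shape[1], X.shape[1]))
    for g in groups:
        _, _, vt = np.linalg.svd(Xc[:, g], full_matrices=False)
        R[np.ix_(g, g)] = vt.T  # columns are this group's principal axes
    return R


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 6))
R = rotation_matrix(X, n_groups=3, seed=1)
X_rotated = X @ R  # the next ensemble member would be trained on these inputs
```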

A concept similar to bagging is to use all of the training data each time but with different initial conditions for the parameter estimates. For model types such as neural networks (Clemen 1989) or decision trees (Ali and Pazzani 1996) that employ some form of learning or gradient descent, this can also create a robust ensemble.

3.5.2 Boosting

Conceptually, we could say that boosting is a process of building subsequent models on the residuals of previous models, although this idea must be generalized for model types that have no explicit measure of residuals (Schapire 2003; Schapire and Freund 2013). Adaptive boosting (AdaBoost) (Freund and Schapire 1996) reweights the training data with each iteration to emphasize the points that were not predicted as accurately in the previous iterations. Gradient boosting (Friedman 2001) computes the gradient of a loss (fitness) function with respect to the current ensemble's predictions and fits each new model to the negative of that gradient; for squared-error loss, this is exactly the residual. Stochastic gradient boosting (Friedman 2002) borrows the subsampling idea from bagging: at each iteration, the new model is fit to a random subsample of the training data. These methods can also be applied to any model type. The popular extreme gradient boosting (XGBoost) package (Chen and Guestrin 2016) is a highly optimized version of gradient boosting.
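
For squared-error loss, the residual view and the gradient view of boosting coincide, which makes gradient boosting easy to sketch. Below, regression stumps are fit in turn to the current residuals and added with a learning rate (shrinkage). The data, learning rate and number of rounds are illustrative assumptions, and this is far simpler than an optimized package such as XGBoost.

```python
import numpy as np


def fit_stump(X, r):
    """Least-squares regression stump fit to residuals r.

    Returns (feature, threshold, left_value, right_value).
    """
    best = (np.inf, 0, 0.0, 0.0, 0.0)
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j])[:-1]:
            mask = X[:, j] <= t
            left, right = r[mask].mean(), r[~mask].mean()
            sse = ((r[mask] - left) ** 2).sum() + ((r[~mask] - right) ** 2).sum()
            if sse < best[0]:
                best = (sse, j, t, left, right)
    return best[1:]


def stump_predict(stump, X):
    j, t, left, right = stump
    return np.where(X[:, j] <= t, left, right)


def gradient_boost(X, y, n_rounds=40, learning_rate=0.1):
    """Each stump is fit to the current residuals, i.e. the negative
    gradient of the squared-error loss."""
    base = y.mean()
    pred = np.full(len(y), base)
    stumps, mse_history = [], [np.mean((y - pred) ** 2)]
    for _ in range(n_rounds):
        stump = fit_stump(X, y - pred)
        pred = pred + learning_rate * stump_predict(stump, X)
        stumps.append(stump)
        mse_history.append(np.mean((y - pred) ** 2))
    return base, stumps, mse_history


rng = np.random.default_rng(0)
X = rng.uniform(size=(150, 2))
y = np.sin(3 * X[:, 0]) + X[:, 1] ** 2 + rng.normal(scale=0.1, size=150)
base, stumps, mse = gradient_boost(X, y)
print(round(mse[0], 3), round(mse[-1], 3))  # training MSE never rises from round to round
```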

Many studies have been performed to compare ensemble methods (Nanni and Lumini 2009; Wang et al 2011), but the winning approach probably depends on the specific problem and data set. For example, gradient boosting has been reported to be more susceptible to outliers.

3.6 Hybrid ensembles

A very large area of research involves creating hybrid models, where specific model types are chosen that are intended to be integrated in nontrivial ways, usually via an algorithm specifically tailored to the models chosen and the application area. This is different from heterogeneous ensembles, where the forecasts are combined via one of the voting schemes in Table 6. Instead, hybrid ensembles create an architecture that leverages the specific traits of the models. The criterion for success is not about choosing which models are most orthogonal and accurate (Hansen and Salamon 1990). Rather, it involves combining models that may

- use different data sources,

- predict over different forecast horizons or

- identify different problem structures.

Thus, the models are inherently complementary, often making measures such as orthogonality or comparative accuracy undefinable.

A classic example in credit risk is the use of roll-rate models (Federal Deposit Insurance Corporation 2007) for portfolio forecasting for the first six months combined with vintage models (Breeden 2014) for the longer-horizon forecasts. In this case, the analyst would usually switch from one model to the other at a certain forecast point or use a weighting between the models that is a function of forecast horizon. Some version of this approach has been in use for decades, because roll rates are known to be accurate for the short term, and vintage models for the long term.
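
The horizon-dependent weighting can be sketched as a linear hand-off between two monthly forecasts. The ramp from month 6 to month 9 is a hypothetical choice; setting `end = start + 1` approximates a hard switch after month `start`.

```python
import numpy as np


def blend_forecasts(roll_rate_fc, vintage_fc, start=6, end=9):
    """Horizon-dependent weighting of two monthly forecasts (requires start < end).

    The vintage-model weight is 0 through month `start`, rises linearly,
    and reaches 1 at month `end`.
    """
    horizon = np.arange(1, len(roll_rate_fc) + 1)
    w = np.clip((horizon - start) / (end - start), 0.0, 1.0)
    return (1 - w) * np.asarray(roll_rate_fc, float) + w * np.asarray(vintage_fc, float)


# Dummy inputs that make the weights visible: roll-rate = 1, vintage = 0.
print(np.round(blend_forecasts([1.0] * 12, [0.0] * 12), 3))
```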

The list of hybrid ensembles (or hybrid models) in the literature is far too long to enumerate, but the following provide a few examples: decision trees and neural networks (Langdon et al 2002), SVMs and neural networks (Abedin et al 2018; Cortes and Vapnik 1995), naive Bayes and SVMs (Min and Cho 2011), a classifier ensemble with genetic algorithms (Zhang et al 2019) and genetic algorithms with artificial neural networks (Oreski et al 2012). Some authors provide surveys of collections of hybrid ensembles generally (Ardabili et al 2019) or for specific application areas such as bankruptcy prediction (Verikas et al 2010). Hybrids combining APC models (Fu 2018; Glenn 2005; Holford 2005; Mason and Fienberg 1985) with origination scores, behavior scores, neural nets or gradient-boosted trees were created specifically to better solve the problem of data sets with few economic cycles, as described above (Breeden 2016; Breeden and Crook 2020; Breeden and Leonova 2019).

4 APPLICATIONS IN CREDIT RISK

ML methods received early attention from researchers, but their adoption in operational contexts has been understandably cautious for the reasons discussed in Section 6. The earliest experiments were primarily in fraud detection, credit scoring (Desai et al 1996; Henley and Hand 1997; Makowski 1985; Wes 2000; Yobas et al 2000) and corporate bankruptcy and default forecasting (Odom and Sharda 1990). As ML methods have matured along the lines described above, parallel efforts occurred in the application of those techniques to areas of credit risk, resulting in a wide range of new applications.

4.1 Credit scoring

Credit scores were created to predict the relative risk of default among borrowers (Coffman and Chandler 1983; Lewis 1994). Their success as compared with human judgment was so great that they became part of the standard credit bureau offering and an essential part of the lending ecosystem. These bureau scores have been developed and refined over decades, and are essentially the result of an optimization process in which disparate and complex consumer performance history has been linearized into factors that fit well into a logistic-regression model. This would seem to be the same kind of work done automatically by ML, but it has historically been done through human intuition and experimentation.

Anecdotally, developers of modern bureau scores are said to use ML methods to search for additional interaction terms and nonlinearities. Those lessons are taken back to the original logistic-regression-based model to create small improvements, but the advances available from ML appear to be small compared with the decades of human optimization already performed. However, Henley and Hand (1997) showed that even small enhancements to credit score performance can have significant returns.

In principle, any institution can purchase from the bureaus data similar to that which goes into creating the bureau scores and do a head-to-head test of in-house ML-model scores against those of the bureau. In any such test, the in-house model has the great advantage that the target is known. When developing a bureau score, the model is attempting to predict default without knowing what product the consumer will be offered, or whether default will occur in the absence of new loans and be based purely on existing loans. An in-house model is typically built to predict the outcome of offering a new loan of a specific type, potentially even incorporating the terms of that loan. Fair comparisons are difficult but perhaps unimportant. A developer creating an in-house model can jump straight to sophisticated modern methods, either taking the bureau score as an input or starting fresh, in each case bypassing the decades of labor put into the original bureau scores.

ML in credit scoring is not new. Comparative surveys can be found as far back as 1994 (see, for example, Richeson et al 1994). New comparative analyses continue to appear as new methods are developed and more data becomes available. One of the most complete surveys was conducted by Lessmann et al (2015), who noted the irony that most published work on ML in credit scoring leveraged only very small data sets for comparing big-data ML methods. Lessmann et al sought to resolve that shortcoming by testing multiple methods on multiple, larger data sets. These surveys are useful both in bringing the readers up to date on the latest methods and in suggesting which methods could be best, but no single method wins in all studies (Baesens et al 2003). The obvious conclusion is that not all data sets have similar structures, and the analyst can still expect to test several approaches to find which is most effective on a specific data set. Similarly, researchers need to be careful to avoid publishing conclusions that claim one method is better than another based only on one data set over one time period.

4.1.1 Neural networks

Neural networks are one of the most extensively tested methods for credit scoring and one of the first ML methods employed (Desai et al 1996; Jensen 1992; Malhotra and Malhotra 2003; Vellido et al 1999; Wes 2000). They can function like a nonlinear version of dimension reduction algorithms such as PCA or as factor discovery methods in deep learning contexts. They offer additive and comparative interaction terms between variables. On the most basic level, neural networks provide a nonlinear response function between input and output. With enough training data, these attributes can be a powerful combination.

The first challenge with applying neural networks is in choosing an architecture. In theory, with enough data a fully connected feedforward neural network should be able to learn its own architecture, but the reality is more challenging. Some of the biggest success stories in using deep learning neural networks required vast amounts of training to determine the metaparameters for the networks: number of inputs, number of hidden layers, number of nodes in each layer, activation functions for the nodes, etc.

Therefore, much of the work around neural networks is in how to choose or learn an optimal architecture. Genetic algorithms have been used to select the optimal set of inputs (Bahnsen and Gonzalez 2011; Yobas et al 2000). Classic genetic algorithms performed crossover and mutation on a binary encoding of the parameter space (Goldberg 1989). That binary encoding is rarely optimal for applications in credit risk (Breeden 1998). A more general evolutionary approach (De Jong 2006) could operate on the full architecture of the neural network in order to share optimal subnets across candidate networks within a population.
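
To make the classic binary encoding concrete, the sketch below evolves input-selection masks (one bit per candidate input) using truncation selection, one-point crossover, bit-flip mutation and elitism. The fitness function (holdout error of a least squares model plus a small penalty per input) and all population settings are assumptions for illustration; in the setting described above, a neural network would take the place of least squares.

```python
import numpy as np


def fitness(X, y, mask, n_train, penalty=0.01):
    """Holdout MSE of least squares on the selected inputs plus a per-input penalty (lower is better)."""
    cols = np.flatnonzero(mask)
    X_train = np.column_stack([np.ones(n_train), X[:n_train, cols]])
    X_hold = np.column_stack([np.ones(len(y) - n_train), X[n_train:, cols]])
    beta, *_ = np.linalg.lstsq(X_train, y[:n_train], rcond=None)
    return np.mean((X_hold @ beta - y[n_train:]) ** 2) + penalty * mask.sum()


def ga_select(X, y, pop_size=20, generations=30, p_mut=0.1, seed=0):
    rng = np.random.default_rng(seed)
    n_train, n_inputs = len(y) // 2, X.shape[1]
    pop = rng.random((pop_size, n_inputs)) < 0.5  # one bit per candidate input
    for _ in range(generations):
        scores = np.array([fitness(X, y, m, n_train) for m in pop])
        pop = pop[np.argsort(scores)]  # best first
        parents = pop[: pop_size // 2]  # truncation selection
        children = [pop[0]]  # elitism: the best mask survives unchanged
        while len(children) < pop_size:
            a, b = parents[rng.integers(len(parents), size=2)]
            cut = rng.integers(1, n_inputs)
            child = np.concatenate([a[:cut], b[cut:]])  # one-point crossover
            child ^= rng.random(n_inputs) < p_mut  # bit-flip mutation
            children.append(child)
        pop = np.array(children)
    scores = np.array([fitness(X, y, m, n_train) for m in pop])
    return pop[np.argmin(scores)]


# Synthetic data: only inputs 0 and 1 matter.
rng = np.random.default_rng(3)
X = rng.normal(size=(400, 6))
y = 2 * X[:, 0] - 1.5 * X[:, 1] + rng.normal(scale=0.5, size=400)
best = ga_select(X, y)
print(best.astype(int))  # inputs 0 and 1 are expected to be switched on
```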

Feedforward networks are the most commonly used, largely because they are the easiest to train and comprehend. However, RNNs have been used in behavior scoring contexts (Hsu et al 2019) to create memory within the network, rather than having the analyst provide lagged inputs of dynamic variables. When the network is applied to massive amounts of input data, such as transactional information, CNNs have been used (Kvamme et al 2018).

Even with an optimal architecture, limiting overfitting (Lawrence et al 1997; Srivastava 2013; Tetko et al 1995) is a significant problem. Much work has been done in this area, with the surprising finding that the number of parameters in deep learning networks may not be as much of a problem as we think (Belkin et al 2018). One explanation may be that the initial random assignment of many small parameters might actually create robustness to input noise rather than the multicollinearity nightmare we would otherwise expect.

Even worse can be transient structures that are actually present in the data but only for a short period of time. When we know that a certain structure will not persist in the future, such as an old account-management policy or an expiring government program, how do we get the neural network to forget? One answer could be the “given knowledge” approach suggested by Breeden and Leonova (2019), in which we could train a subnet on just the transient structure, embed this as a fixed component of a network trained to solve the larger problem on the full data set and then remove the subnet when creating forecasts out of sample.

Neural networks are data hungry and time intensive to train, but they can be used successfully. Many authors have studied the data and time requirements, comparing different neural network designs as well as comparing these designs with other methods (Abdou et al 2008; Baesens et al 2003; Finlay 2011; Lai et al 2006b; Malhotra and Malhotra 2003; Sarlija et al 2006; Xiao et al 2006). When the available data set is wide in the number of inputs but short in the number of observations, ensembles of small networks can also be effective (West et al 2005).

4.1.2 SVMs

SVMs excel at creating segmentations of the input vector space for classification. The ability to segment the observation space with arbitrary hyperplanes provides an effective classification technique for an arbitrary number of end states and does so without assumptions about the distributions of the input factors or target categories. They are less well suited to continuous prediction problems, although the techniques mentioned above can be applied to produce continuous outputs. SVMs have been applied to credit scoring by multiple authors and found to be an effective approach in many cases (Baesens et al 2003; Schebesch and Stecking 2005; Van Gestel et al 2004; Xu et al 2009).

One of the biggest advantages in using SVMs is the ability to use kernels to create optimally separating hyperplanes (OSHs). The “kernel trick” refers to the mapping of the data to a higher-dimensional space, which can in some cases dramatically simplify the process of finding OSHs. The placement of the hyperplanes is a nonlinear problem requiring an optimizer.
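
The kernel trick can be checked numerically. For two-dimensional inputs, the degree-2 polynomial kernel k(x, z) = (x · z)^2 equals an ordinary inner product after an explicit map into three dimensions, so an SVM can work in the larger space while only computing inner products in the original one. The random test vectors are arbitrary.

```python
import numpy as np


def phi(x):
    """Explicit degree-2 feature map for a 2-D input."""
    x1, x2 = x
    return np.array([x1 ** 2, np.sqrt(2.0) * x1 * x2, x2 ** 2])


def poly2_kernel(x, z):
    """The same inner product, computed in the original 2-D space."""
    return float(np.dot(x, z)) ** 2


rng = np.random.default_rng(0)
x, z = rng.normal(size=2), rng.normal(size=2)
print(poly2_kernel(x, z), phi(x) @ phi(z))  # equal up to floating-point rounding
```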

As with neural networks, the challenge is optimizing the architecture. With SVMs, the input features and the kernel parameters must be optimized. The choice of whether to use a linear, polynomial, radial basis function or other kernel is a matter of experimentation given a specific data set. No universal best answer exists, but the best advice is to start simple (eg, linear) and move toward complex as required.

These choices across metaparameters are interdependent. To optimize these metaparameters, genetic algorithms have again been applied (Frohlich et al 2003) in addition to other hybrid approaches (Huang et al 2007). The lesson from studies into neural networks and SVMs is that optimizing the metaparameters is essential to success.

4.1.3 Decision trees

Decision trees are a simple concept that can be used to create sophisticated models. The concept is a recursive partitioning of the input space until we have enough confidence to make a prediction. They have been used for decades in credit risk (Davis et al 1992; Frydman et al 1985; Makowski 1985), where the earliest decision trees were heuristically created. Modern algorithms can use a variety of partitioning criteria: misclassification error, Gini index, information gain, gain ratio, analysis of variance (ANOVA) and others. The final forecast can be the state with the greatest representation in the final leaf, a probability based on representation or a small model, as in regression trees (Breiman et al 1984; Finch and Schneider 2007). The metaparameters are how to optimize the partitioning, the input factors and when to stop partitioning. As usual, these need to be optimized.
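
Two of the partitioning criteria listed above, the Gini index and information gain (entropy reduction), can be sketched as follows. The toy input and default flags are invented; on this data both criteria choose the same split, though in general they need not.

```python
import numpy as np


def gini(y):
    """Gini impurity of a 0/1 default indicator."""
    p = np.mean(y)
    return 1.0 - p ** 2 - (1.0 - p) ** 2


def entropy(y):
    """Entropy in bits, the basis of information gain."""
    p = np.mean(y)
    if p == 0.0 or p == 1.0:
        return 0.0
    return -(p * np.log2(p) + (1.0 - p) * np.log2(1.0 - p))


def split_gain(x, y, threshold, impurity=gini):
    """Impurity reduction from splitting on x <= threshold, weighting leaves by size."""
    left, right = y[x <= threshold], y[x > threshold]
    child = (len(left) * impurity(left) + len(right) * impurity(right)) / len(y)
    return impurity(y) - child


# Toy data: one hypothetical input and the corresponding default flags.
x = np.array([1, 2, 3, 4, 5, 6, 7, 8])
y = np.array([0, 0, 0, 0, 1, 0, 1, 1])
for criterion in (gini, entropy):
    best = max(np.unique(x)[:-1], key=lambda t: split_gain(x, y, t, criterion))
    print(criterion.__name__, best)  # both criteria choose the split at x <= 4
```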

A single tree can have the same overfitting concerns as previous methods, but the explosion in the use of decision trees has been spurred on by the introduction of ensembles. Bagged decision trees (Zhang et al 2010), boosted decision trees (Bastos 2007), random forests (Ghatasheh 2014; Kruppa et al 2013; Malekipirbazari and Aksakalli 2015), rotation forests (Marqués et al 2012; Nanni and Lumini 2009) and stochastic gradient-boosted trees (Chang et al 2018; Twala 2010) are some of the most popular. Most authors agree that this list represents a steady improvement in methodology, currently with stochastic gradient-boosted trees as the usual winner. Although ensemble methods are most popular in scoring when applied to decision trees, these methods are found combined with all credit scoring techniques (Abellán and Mantas 2014).

One advantage of decision trees is the mapping between trees and rules. Trees can be compared with known rules, and rules can be learned from trees (Chen and Hung 2009).

In general, trees have an advantage in handling sparse data or data with outliers. Because a tree effectively bins each input, it limits sensitivity to outliers, a protection that continuous methods such as neural networks lack. In situations where the data is abundant, of good quality and with clear nonlinearities, neural networks are often the reported winners.

4.1.4 Nearest neighbors and case-based reasoning

One category of models could be defined as those that learn from past examples. Case-based reasoning (CBR) (Buta 1994) searches through past lending experiences to find a comparable loan. In commercial lending, where examples are few and nearly unique, this can be an effective approach. Where more data is available, as with consumer lending, a k-nearest neighbors (kNN) approach (Henley and Hand 1997; Marinakis et al 2008) is conceptually equivalent.

The challenge with both CBR and kNN lies with identifying comparables. This is not unlike the challenge for home appraisals. If the closest comparable home is at a distance, in a different kind of neighborhood, is it really comparable? This concept applies to both methods here. Any data set will be nonuniformly distributed along the explanatory factors. When optimizing the metric for identifying comparable loans or choosing k in kNN, the definition of a near neighbor that works well in one region of the space may be a poor choice in another.

Using geography as an example, finding 20 neighbors in an urban setting might provide a roughly homogenous set, whereas finding the same 20 neighbors in a sparse geography could span counties or even states. Of course, using CBR or kNN geographically could create a redlining risk, but the same concept applies, if more abstractly, to any set of explanatory factors. Therefore, the success of these methods appears to be tied to the uniformity of the distribution of the data set.
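
The sensitivity to data density can be seen directly by reporting, alongside the kNN forecast, the distance needed to reach the k-th neighbor. In this synthetic sketch, the same k = 20 covers a tiny neighborhood in a crowded segment and a very large one in a sparse segment; the data and the two-dimensional feature space are assumptions.

```python
import numpy as np


def knn_default_rate(X_train, defaulted, x_new, k=20):
    """Default share among the k nearest past loans, plus the distance to the
    k-th neighbor as a rough gauge of how comparable the comparables are."""
    dist = np.linalg.norm(X_train - x_new, axis=1)
    nearest = np.argsort(dist)[:k]
    return defaulted[nearest].mean(), dist[nearest].max()


rng = np.random.default_rng(0)
dense = rng.normal(scale=0.5, size=(900, 2))    # e.g. a crowded urban segment
sparse = rng.uniform(-10, 10, size=(100, 2))    # a thinly populated segment
X_train = np.vstack([dense, sparse])
defaulted = (rng.uniform(size=1000) < 0.1).astype(float)

_, radius_dense = knn_default_rate(X_train, defaulted, np.array([0.0, 0.0]))
_, radius_sparse = knn_default_rate(X_train, defaulted, np.array([8.0, 8.0]))
print(radius_dense, radius_sparse)  # same k, very different neighborhood sizes
```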

Where CBR and kNN may excel is in extremely sparse-data situations. When tens of events or fewer are available, especially when the events are very heterogeneous in their properties, matching to prior experience without attempting to interpolate or extrapolate as in estimation-based approaches may be more effective.

4.1.5 Kernel methods, fuzzy methods and rough sets

Kernel methods, fuzzy methods and rough sets are best viewed as methods to augment other modeling approaches. Decision trees, SVMs or any method that performs classification by drawing hard boundaries between the input factors will inevitably have uncertainty in the location of those boundaries. In general, we would assume that the greatest forecast errors should occur near the boundaries. Incorporating estimation kernels into these methods (Chalup and Mitschele 2008; Yang 2007) or treating the boundaries as fuzzy (Hoffmann et al 2007) can capture this uncertainty and potentially improve accuracy by reporting appropriate probabilities. Estimation kernels or fuzzy logic have been incorporated into many credit scoring methods (Grace and Williams 2016; Wang et al 2005; Yu et al 2009; Zhou et al 2011). This may be particularly valuable in sparse-data settings where the boundaries can only be approximations.
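
One simple way to soften a hard classification boundary is a logistic membership function, sketched below for a single score cutoff. The cutoff of 600 and the width of 20 points are hypothetical. With the crisp rule, scores of 595 and 605 land in different classes with full certainty, whereas the fuzzy version reports membership close to 0.5 for both.

```python
import numpy as np


def crisp_membership(score, cutoff=600.0):
    """Hard boundary: membership in the 'good risk' class jumps from 0 to 1 at the cutoff."""
    return (np.asarray(score, dtype=float) >= cutoff).astype(float)


def fuzzy_membership(score, cutoff=600.0, width=20.0):
    """Logistic membership that reflects uncertainty near the cutoff.

    `width` (in score points) controls how soft the boundary is; both it
    and the cutoff are hypothetical values.
    """
    return 1.0 / (1.0 + np.exp(-(np.asarray(score, dtype=float) - cutoff) / width))


scores = np.array([540, 595, 600, 605, 660])
print(crisp_membership(scores))               # [0. 0. 1. 1. 1.]
print(np.round(fuzzy_membership(scores), 3))  # rises smoothly through 0.5 at the cutoff
```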

Rough sets have also seen application to credit scoring (Mckee 2000). With an objective similar to kernel and fuzzy methods, rough sets have been combined with other base modeling techniques to incorporate the imprecision of the available information. Along these lines, rough sets have been combined with decision trees (Zhou et al 2008) and with SVMs (Chen and Li 2010).

4.1.6 GP

Genetic programming employs trees to perform computation. The leaves are input values or numerical constants. The branching nodes contain numerical operators or functions. In this way, nested algebraic operations can be performed to create predictions for credit scoring (Abdou 2009; Huang et al 2006a; Ong et al 2005).

The genetic aspect refers to how the tree structure, constants and input factors are chosen. As with genetic algorithms, concepts of mutation and crossover are employed. Mutation is a simple change in a constant, swapping an input factor or swapping an operator or function. Crossover is the more interesting process of swapping subtrees between two trees. In genetic algorithms applied to binary representations, crossover rarely produces viable offspring, because the fitness landscape lacks useful symmetries. In applications of GP to credit scoring, such symmetries exist if subtrees can capture conceptual subsets of the problem, eg, by swapping the proper transformation of an input factor between candidate trees.
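
A compact sketch of a GP tree representation with evaluation, subtree crossover and constant mutation follows. The tuple encoding, the three operators and the input names are hypothetical choices for illustration; a full GP system would add protected division, tree-depth limits and a fitness-driven selection loop.

```python
import operator
import random

# Branch nodes are (operator_name, left, right); leaves are input names or constants.
OPS = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}


def evaluate(tree, inputs):
    if isinstance(tree, str):
        return inputs[tree]
    if isinstance(tree, (int, float)):
        return tree
    op, left, right = tree
    return OPS[op](evaluate(left, inputs), evaluate(right, inputs))


def subtrees(tree, path=()):
    """Yield (path, node) for every node; a path lists child positions from the root."""
    yield path, tree
    if isinstance(tree, tuple):
        yield from subtrees(tree[1], path + (1,))
        yield from subtrees(tree[2], path + (2,))


def replace(tree, path, new):
    """Return a copy of tree with the node at path replaced by new."""
    if not path:
        return new
    node = list(tree)
    node[path[0]] = replace(tree[path[0]], path[1:], new)
    return tuple(node)


def crossover(a, b, rng):
    """Graft a random subtree of b onto a random position in a."""
    path_a, _ = rng.choice(list(subtrees(a)))
    _, donor = rng.choice(list(subtrees(b)))
    return replace(a, path_a, donor)


def mutate_constant(tree, rng, scale=0.1):
    """Point mutation: perturb one randomly chosen numeric constant."""
    constants = [(p, t) for p, t in subtrees(tree) if isinstance(t, (int, float))]
    if not constants:
        return tree
    path, value = rng.choice(constants)
    return replace(tree, path, value + rng.gauss(0.0, scale))


# Hypothetical candidate score formulas over hypothetical input names.
a = ("sub", ("mul", "utilization", 2.0), ("mul", "months_on_book", 0.01))
b = ("add", ("mul", "dti", 1.5), 0.3)
inputs = {"utilization": 0.8, "months_on_book": 24, "dti": 0.4}

rng = random.Random(0)
print(evaluate(a, inputs))  # 2.0 * 0.8 - 0.01 * 24, about 1.36
child = crossover(a, b, rng)
print(child, evaluate(child, inputs))
print(mutate_constant(a, rng))
```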

For credit scoring, the fitness function will be one or several measures of the accuracy in predicting the target variable. The optimization naturally occurs on an ensemble of candidate trees. The best tree at the end of the optimization process can be used as the model, but following the ensemble concept a voting algorithm could also be applied across all qualifying trees. However, one challenge with genetically learned ensembles is that they tend to cluster around a single peak in the fitness landscape. A similarity penalty could be added to the fitness function to encourage diversity in the population, both to reduce the risk of becoming stuck in a local optimum and to increase the usefulness of the ensemble.

GP appears to be useful as a highly nonlinear method. To justify the slow search speed of genetic methods, we would need a problem that is equally complex. Simple credit scoring problems may not qualify, but the use of alternate data sources might make GP more interesting.

4.1.7 Alternate data sources

Some ML methods for credit scoring are specifically focused on how to incorporate new data sources into the scores. Cashflow analysis using data scraped from demand deposit accounts is an area of successful business application, particularly during the Covid-19 pandemic, when so much traditional scoring data is in doubt. Although the data source is new to scoring, the methods for analysis are more traditional. Analysts have sought to determine the frequency and reliability of income from different sources. During the Covid-19 pandemic, someone with periodic, steady income could be a good credit risk regardless of credit score, industry of employment or many other underwriting criteria.

Mobile phone data is potentially an important data source in emerging markets, specifically for underbanked consumers. Mobile phone calling records and billing information have been found to be effective for assessing the credit risk of Chinese consumers (Wang 2019). Research has also been done in underdeveloped markets using smartphone metadata such as types of apps installed, text message history, etc (Liu et al 2017). Both studies used well-known credit scoring and ML methods, just with emphasis on sourcing and regularizing new data types.

Some novel data sources require corresponding innovations in analysis. Social network data has proven to be quite interesting (Freedman and Jin 2017; Wei et al 2016), but incorporating data from networks into a credit score can be a challenge. Low-dimensional embeddings of network graphs (Hamilton et al 2017) are the standard approach to creating a usable input factor for modeling. However, recent research (Seshadhri et al 2020) suggests that low-dimensional embeddings lose much of the information in the network. The best approach for incorporating social network data will continue to be a topic of research for some time, as will the ethics and legality of incorporating such data into the underwriting process.

4.2 Corporate defaults

Discussions of credit scoring usually carry an implication of consumer loans and large volumes of training data. Modeling corporate defaults and bankruptcies is a similar problem, but with fewer events in the training data and less standardized inputs. A panelist at a conference on ML in finance explained humbly that they used ML just to read the corporate financial statements. The scoring models were trivial. In fact, standardizing diverse and heterogeneous inputs may be one of the best uses of ML in lending applications.

Even so, some large data sets on corporate defaults do exist, and a variety of papers have been published to apply ML to the problem (Anagnostou et al 2020; Shin and Han 2001; Vahid and Ahmadi 2016). Bankruptcy and default are not exactly the same thing, but because bankruptcy filings are public, many works have focused on bankruptcy (Atiya 2001; Coats and Fant 1993; Mckee 2000; Min and Lee 2005; Odom and Sharda 1990; Shin and Lee 2002; Vassiliou 2013). Across both applications, the methods tested cover the full range of ML techniques.

Models for lending to small and medium-sized enterprises fall in between consumer and commercial approaches, because the performance is more closely tied to a small group of owners. Although less data is available, some testing of ML methods has been performed as well (Fritz and Hosemann 2000; Li et al 2016; Zhu et al 2017). Notably, many fintech firms have arisen to address this market using alternate data sources and ML methods.

4.3 Other scoring applications

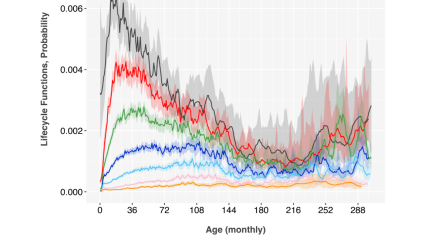

Published work often lags behind what is being done in house at lenders around the world. For example, the author knows that prepayment and attrition models have been created using ML, with the short study in Section 5 as one such previously unpublished example. At this point, we can assume that ML is being tested everywhere models can be employed in lending.